The Hidden Source of AI’s Emerging Power

Geoffrey Hinton is more worried than most about what the new AI models could lead to. Maybe that’s because he understands them more deeply than most.

Four decades ago I interviewed a then-obscure computer scientist named Geoffrey Hinton for a piece I was writing about artificial intelligence (for a then-obscure and now-defunct journal called The Wilson Quarterly). Hinton has since become much less obscure, and this week he burst into public consciousness via a New York Times piece that carried this headline: ‘The Godfather of A.I.’ Leaves Google and Warns of Danger Ahead.

The specific dangers cited by Hinton in the Times piece are familiar to people who have been paying close attention to the AI debate (or who have read the several pieces that have appeared in this newsletter about these dangers and what might be done about them): AI could (a) take lots of people’s jobs; (b) make it harder to tell the real from the fake; (c) behave in unpredictably dangerous ways; (d) be weaponized, in both literal and figurative senses, by bad actors; (e) and so on.

But there’s a larger concern behind these specific concerns, and it seems to account for Hinton’s new sense of urgency about getting his warning out. He thinks, as he told the New York Times, that AI “could actually get smarter than people,” and he now thinks it could do this much sooner than he’d imagined until recently.

In case you’re not bothered by the thought of machines that are smarter than you, here’s how Hinton elaborated on that prospect shortly after the New York Times story broke: “I think it's quite conceivable that humanity is just a passing phase in the evolution of intelligence.” In which case “the good news is we figured out how to build beings that are immortal” and the bad news is that maybe “that immortality is not for us.”

After re-reading my 1984 piece, and looking at what it did and didn’t anticipate about the current state of artificial intelligence, I’ve gotten a clearer sense of the inchoate power that Hinton sees within AI, notably including such “large language models” as ChatGPT. (Though some might argue over whether Hinton is “the” Godfather of AI, he certainly has a strong claim to being the Godfather of ChatGPT; a former graduate student of his is chief scientist at OpenAI and oversaw the building of ChatGPT, which broadly embodies the approach to AI that Hinton has been advocating since the 1970s.)

The key thing to understand about these large language models, I increasingly think, is that one common way of describing them is deeply misleading—like, 180-degrees misleading. That common description is that they’re just “fancy auto-complete” or “stochastic parrots”: they detect, in the texts they’re trained on, subtle statistical patterns in the frequency and order of words and then spit out sentences that follow these patterns.

This may be roughly accurate so far as it goes. The problem is with what some people take to be its corollary: that these machines have no “understanding” of sentences, no conception of the “meaning” of words. That, I think, is a misconception, and one that needs to be addressed if we’re going to appreciate the potential power of AI.

I am not, by the way, saying that these machines have the subjective experience of understanding a sentence or grasping the meaning of a word or phrase. (I’m agnostic on the question of computer sentience.) But they do seem to have the functional equivalent of these things—and, moreover, the physical equivalent of these things; just as our brains presumably harbor patterns of physical activity corresponding to subjectively experienced concepts and thoughts, large language models have such patterns of physical activity—whether or not they have corresponding subjective experiences. That’s why they’re so smart, and that’s why they can get so much smarter than they already are, and that’s why Hinton is worried.

One way to explain what I mean is to explain a questionable assumption I carried into the writing of my 1984 piece.

The piece outlined two rival approaches to AI. There was what I called the “top down” approach, which tries to give machines the power to reason by manipulating sequences of symbols. And there was the “bottom up” approach, known as “connectionism” or “massive parallelism” and involving the building of “neural networks.” Large language models, such as ChatGPT or Google’s Bard, are massively parallel, meaning that they involve large numbers of computer processors doing stuff simultaneously.

In a way it’s not surprising that massive parallelism has—as Hinton predicted long ago—been the path to the biggest breakthroughs in emulating human cognitive performance. After all, the human brain is massively parallel: lots of neurons perform little chores at the same time. (And as for why most researchers in the 1980s were betting on the other path: Well, for one thing, the conscious part of thinking, the part we’re aware of, is serial, not parallel—that is, we can only think one thing at a time.)

But if the massively parallel part of large language models isn’t a big surprise, another of their central features is surprising. At least, it surprised me—and when I re-read my 1984 piece, I could see why it surprised me. The surprise has to do with the relationship of words to meaning.

Consider the example I provided in that piece of a “bottom up” approach to AI, featuring parallel processing. The example came from the work of Jerome Feldman, then at the University of Rochester and now at Berkeley. I wrote:

In Feldman’s model, concepts are represented not by strings of symbols… but by patterns of interconnection among processors. This approach, he says, offers a way to address the issues of ambiguity and context more economically than do scripts and frames [two then-popular “top down” approaches to generating human language].

Take a sentence such as "John threw a ball for charity." In the machine envisioned by Feldman, the two senses of the verb to throw—to hurl, and to host—would live in separate processors, or "nodes." Upon encountering this sentence, both nodes

would seek support for their interpretations; they would try to find other words in the sentence with which they have an affinity—with which they are connected. Both would have immediate success. The hurl node is wired to the node housing the corresponding sense of ball, a spherical object. The second sense of to throw, to host, is linked with the second sense of ball, a dance.

So far, then, it’s a dead heat between two possible interpretations of the sentence. But:

When each pair tries to encompass the third key member of the sentence—the swing vote—only one succeeds. The dance node is connected to the charity node; charity balls are common enough to warrant that linkage.

But the more conventional sense of ball searches in vain for a link with charity. The host-dance coalition now has control of the sentence and will electronically suppress any dissent.
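For readers who like to see the mechanics, here is a toy sketch of the kind of hand-wired disambiguation the passage describes. It is not Feldman's actual model; the sense labels, the link table, and the scoring rule are all assumptions made up for illustration. The point is only that a human who already knows the meanings has to type in the links.

```python
from itertools import product

# Toy sketch only: hand-wired "sense nodes" and links, invented for illustration.
# This is not Feldman's actual model.

# Each word maps to its candidate senses (hypothetical labels).
SENSES = {
    "threw": ["throw/hurl", "throw/host"],
    "ball": ["ball/sphere", "ball/dance"],
    "charity": ["charity"],
}

# Links wired in by a human who already knows which senses go together.
LINKS = {
    frozenset({"throw/hurl", "ball/sphere"}),
    frozenset({"throw/host", "ball/dance"}),
    frozenset({"ball/dance", "charity"}),
}

def support(interpretation):
    """Count the hand-wired links between every pair of senses chosen."""
    senses = list(interpretation)
    return sum(
        frozenset({a, b}) in LINKS
        for i, a in enumerate(senses)
        for b in senses[i + 1:]
    )

def interpret(words):
    """Try every combination of senses and keep the best-supported one."""
    candidates = product(*(SENSES[w] for w in words))
    return max(candidates, key=support)

best = interpret(["threw", "ball", "charity"])
print(best)
```

In this sketch the host-dance reading wins because it is the only one that also links up with charity, which mirrors the "swing vote" in the quoted passage.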

Now, I don’t purport to have a completely clear understanding of how exactly Feldman’s model worked. And I’m not sure I ever did. But, re-reading this passage, I’m pretty sure about one impression I had at the time: There would be a kind of connection between words and their meanings, and the connection would be built into the model by people who understood the meanings of the words.

So, when I wrote that “the two senses of the verb to throw—to hurl, and to host—would live in separate processors, or ‘nodes,’” I assumed that a human being familiar with the ambiguity of “throw” would have set up those two nodes and their relationships to other nodes.

And who knows?—maybe I was right! Maybe that is the way Feldman’s connectionist model was built. But that doesn’t matter. What matters is that the connectionist models that are getting so much attention now—the large language models—do not have any linkage between particular words and particular meanings built into them by human beings. When a large language model starts scanning its first text, it has no clue as to what the symbols it’s processing mean.

And yet—and this is the point that I think is missed by people who dismiss ChatGPT as “fancy auto-complete”—these models do seem to wind up having a conception of the meaning of words. Again, by “conception” I don’t mean a “thought” in the sense of the subjective experience of a thought. So what do I mean?

I’ll let Hinton explain. Five years ago he gave a talk in which he discussed, among other things, language translation. Describing a then-pretty-new AI approach to translation, he said, “So what we're going to do is we're going to have an encoder network that reads the sentence in one language and turns it into a thought and then we're going to take the thought and we're going to turn it into a sentence in another language.” He added, “Of course, to do that you need to know what a thought is.”

His answer: “What I believe a thought is” is “an activity pattern in a big bunch of neurons.” Hinton says many AI researchers (of what I had called the “top down” school) try to use symbols to represent thoughts, but in his view that’s naive because “what's in the brain is just big vectors of neural activity. There's no symbols in there.” So thoughts must be represented in the brain by these vectors of neural activity—and that, he says, is basically the way they’re represented in today’s “deep learning” AIs.

I don’t purport to precisely understand all this; I couldn’t define a vector to save my life. But the main point is that physical configurations of information in the computer (patterns of connection and interaction among the “neurons” in the neural network simulated by the computer) represent not just words and sequences of words but the meanings of words and of sequences of words. And these patterns aren’t created by humans; in fact, the humans who design these models don’t typically know what exactly the patterns are. The patterns, which in Hinton’s view are the physical instantiations of thoughts and concepts, are created by the AI after it processes a bunch of texts.

Thus, Hinton says, a language translation AI will “basically make, say, the vector for Tuesday be very similar to the vector for Wednesday and very different from the vector for although.” And it will do this not because of the phonetic resemblance between Tuesday and Wednesday but because of how much they have in common at the level of meaning. Your concept of what Tuesday is has a lot of overlap with your concept of what Wednesday is, and presumably there is a corresponding similarity between the patterns of neural activity elicited in your brain by these two words. So too, Hinton says, with AI.
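To make "very similar" and "very different" a bit more concrete, here is a minimal sketch using cosine similarity, one common way to compare two vectors. The three vectors are made-up numbers chosen for illustration, not values taken from any real model, and a real model's vectors would be far longer.

```python
import math

# Made-up 4-number vectors for illustration only; real models learn
# vectors with hundreds or thousands of numbers.
vectors = {
    "Tuesday":   [0.9, 0.8, 0.1, 0.0],
    "Wednesday": [0.8, 0.9, 0.2, 0.0],
    "although":  [0.0, 0.1, 0.9, 0.8],
}

def cosine_similarity(a, b):
    """Return a value near 1 when two vectors point the same way, near 0 when unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

days = cosine_similarity(vectors["Tuesday"], vectors["Wednesday"])
mixed = cosine_similarity(vectors["Tuesday"], vectors["although"])
print(f"Tuesday vs Wednesday: {days:.2f}")
print(f"Tuesday vs although:  {mixed:.2f}")
```

With these invented numbers the first score comes out close to 1 and the second close to 0, which is the pattern Hinton describes.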

There are lots of differences between these modern neural networks and the neural network built by Jerome Feldman, the one I wrote about in 1984. But for present purposes the main distinction I want to draw is this: Whereas I conceived of any patterns of connection that represented meaning being “built into” his model by human engineers who understood this meaning, that’s not the way things are done now. The computer just scans texts—patterns of words and of letters—and “figures out” the meaning; it “figures out” that there’s a lot of semantic overlap between Tuesday and Wednesday, and this overlap is reflected in similarities between the patterns of connection and interaction that come to represent the meaning of these words.
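A rough way to see how similarity can emerge from text alone is the sketch below. It swaps in a much simpler technique than the neural networks Hinton describes: it just counts which words appear next to which in a tiny invented corpus. Nothing in it is told what a day of the week is; the corpus, the window size, and the function names are all assumptions for illustration.

```python
import math
from collections import Counter, defaultdict

# Tiny invented corpus. No word meanings are supplied anywhere.
corpus = [
    "we meet on tuesday morning",
    "we meet on wednesday morning",
    "the store opens on tuesday afternoon",
    "the store opens on wednesday afternoon",
    "see you next wednesday",
    "although it rained we went out",
    "although it snowed we stayed in",
]

def context_counts(sentences, window=1):
    """For each word, count the words that appear within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    counts[word][words[j]] += 1
    return counts

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

counts = context_counts(corpus)
sim_days = cosine(counts["tuesday"], counts["wednesday"])
sim_mixed = cosine(counts["tuesday"], counts["although"])
print(f"tuesday vs wednesday: {sim_days:.2f}")
print(f"tuesday vs although:  {sim_mixed:.2f}")
```

Because "tuesday" and "wednesday" keep the same company in these sentences, their count vectors end up close together, while "although" shares no neighbors with either. Real language models learn far richer representations, but the direction of the effect is the same.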

Here’s how Hinton put it in his talk five years ago: “It turns out it works much better to have a system that has no linguistic knowledge whatsoever.” Then he amended that statement: “That is, it’s actually got lots of linguistic knowledge, but it wasn't put in by people.”

I should re-iterate that even the engineers who build large language models profess to have only a fuzzy understanding of what’s going on inside them. So I don’t know how much of what Hinton is saying about the physical instantiations of “thoughts” is rock solid and how much is well-informed hypothesis (though I’m guessing it’s pretty solid).

I should also re-iterate that I have only a very fuzzy understanding of this whole subject. So maybe I haven’t put everything quite the way Hinton would put it. But I’m sure he signs on to the main takeaway: The large language models developed at Google and OpenAI and elsewhere aren’t just “fancy auto-complete”; they’re not just “stochastic parrots” that carry on a conversation by translating incoming symbols into outgoing symbols via some statistical algorithm, without ever translating those symbols into anything you could call concepts or thoughts.

I first started wondering about whether, and how, large language models create some kind of representation of concepts after I interrogated ChatGPT-4 to see if it possessed “cognitive empathy”—that is, an ability to draw plausible inferences about people’s thoughts and feelings based on their situation and their speech and behavior. And it did so well that I am at a loss to explain what’s going on inside it unless it is drawing on configurations of physical information that correspond not just to words or phrases but to concepts. Either it has what amounts to an “understanding” of human nature or it’s magic. And I don’t think it’s magic. (See below for the relevant excerpt from my exchange with ChatGPT, in which it, among other things, plausibly guessed the reaction of someone to the humiliation of a romantic rival.)

If neural networks as I conceived of them in 1984 were the kinds of neural networks we had today, then the meaning of every word and phrase would presumably have to be laboriously programmed into them by human beings. And if you’d asked me in 1984 how you’d go beyond just representing the meaning of words—how you’d get the machine to not only represent concepts but connect the representations in a way that amounted to an “understanding” of human nature, allowing it to actually make good guesses about the reactions of people in particular circumstances—I’d have assumed this understanding would have to be explicitly programmed into the network by human beings. And I’d have had no idea how exactly these human beings would do this programming.

In short: It wouldn’t have been obvious to me how these machines were going to get very smart very fast. But when machines can just scan the linguistic output of humans—and, in principle, scan other sets of information—and construct the underlying concepts and principles, in a kind of “reverse engineering” exercise, they can get very smart very fast. Maybe even too smart too fast.

And this, I think, is why Hinton is, by his own account, more blown away by the power of AI than he was even a few years ago. Though he believed back then that these neural networks do develop “thoughts” and “understandings,” he didn’t fully appreciate the implications of that fact until he saw a very powerful network that had been trained on vast amounts of text. He didn’t yet grasp just how rapidly a modern AI can come to understand—yes, understand—a whole lot.

I was surprised to find, upon re-reading my 1984 piece on AI, that I didn’t quote or even mention Hinton in it—whether because of space constraints, or because of some editor’s red pencil, or what. (Yes, we actually used pencils, and we actually had space constraints.) But I have remembered, ever since talking to him on the phone, his speaking enthusiastically, in his British accent, about the promise of massive parallelism. He turns out to have been right. Which is among the reasons he’s probably worth listening to now.

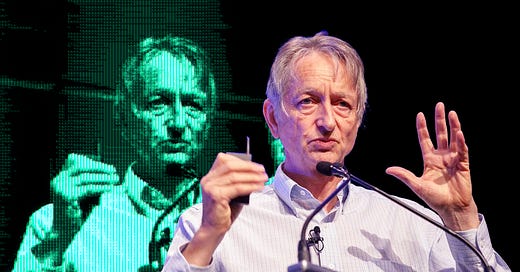

Image of Geoffrey Hinton by Clark McGillis

Excerpt from my chat with ChatGPT:

Me: I'd like to describe a situation to you and have you develop a theory about what's going on in the mind of one of the people I describe. Here's the situation: A teacher asks the class a question and a student volunteers an answer and the teacher says, "Well, I guess I've heard worse answers, but I can't remember when." What do you think the student is feeling and/or thinking now?

ChatGPT-4: Based on the situation you described, the student might feel embarrassed, disheartened, or upset due to the teacher's response, which seems to be critical and sarcastic. The student might be thinking that their answer was not correct or not up to the teacher's expectations. This experience could lead the student to feel hesitant about participating in future discussions or sharing their thoughts in class, fearing further negative feedback or judgment from the teacher.

It's important to note that people's reactions can vary depending on their personality, prior experiences, and emotional resilience. While the provided scenario suggests a negative emotional response, it's possible that some individuals might react differently, focusing on improving their understanding or even finding humor in the situation. Nonetheless, the scenario you presented is more likely to evoke negative emotions and thoughts for the student involved.

Me: Let's assume you're right that the student feels embarrassed and disheartened. What do you think other students in the class are feeling and/or thinking?

ChatGPT-4: In this situation, the reactions of the other students in the class could vary based on their individual personalities, relationships with the teacher and the student who answered, and their own experiences. Some possible feelings and thoughts among the other students might include:

1. Empathy or sympathy: Some students may feel empathetic or sympathetic towards the student who answered, as they might relate to the feeling of being embarrassed or disheartened in a similar situation.

2. Discomfort or awkwardness: Other students may feel uncomfortable or awkward due to the teacher's negative response, not knowing how to react or whether to intervene.

3. Fear or anxiety: Some students might feel anxious or fearful about volunteering answers themselves, worried that they could receive similar negative feedback from the teacher.

4. Indifference: There may be students who are not particularly affected by the situation and remain focused on their own thoughts or the lesson content.

5. Amusement: A few students might find the situation amusing or entertaining, particularly if they have a different perspective on the teacher's comment or do not empathize with the student who answered.

The specific emotions and thoughts of individual students will depend on various factors, and it's important to remember that people's reactions can be diverse and complex.

Me: Suppose there's a student in the class who is romantically attracted to the girlfriend of the student who is feeling embarrassed and disheartened. What do you think this student is feeling and/or thinking?

ChatGPT-4: In this scenario, the student who is romantically attracted to the girlfriend of the embarrassed and disheartened student might experience a mix of emotions and thoughts. Here are some possibilities:

1. Schadenfreude: This student may feel a sense of satisfaction or pleasure from seeing the other student embarrassed, thinking that it might lower the other student's social standing or make the girlfriend reconsider their relationship.

2. Opportunism: The student might think this is an opportunity to show their own knowledge or competence in contrast to the embarrassed student, potentially hoping to impress the girlfriend.

3. Sympathy or empathy: Despite their romantic interest in the girlfriend, the student might still feel sympathetic or empathetic towards the embarrassed student, recognizing the unpleasantness of the situation.

4. Indifference: The student might be more focused on their romantic interest and not pay much attention to the specific situation involving the embarrassed student and the teacher.

5. Anxiety or concern: The student may worry that the girlfriend might become upset or affected by the teacher's response to her boyfriend, which could influence her mood or their interactions.

It's important to note that people can have multiple, sometimes conflicting emotions and thoughts in response to a situation. The exact feelings and thoughts of this particular student would depend on their personality, values, and previous experiences.

The Bob-AI takes are generally insightful, but I submit that they would be more insightful with some minimal knowledge of concepts like vectors.

A vector is just a list of numbers, like [1.5, -2, 0.5]. So Hinton is saying a "thought" is just represented by a list of numbers in the model. You can add or subtract two vectors of the same length like [1.5, -2, 0.5]-[1, 0.5, 0]=[0.5, -2.5, 0.5].
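If it helps, that subtraction can be written out in a few lines of Python. This is just a sketch; the `subtract` helper is a name I made up, and real systems would use a numerical library instead.

```python
def subtract(a, b):
    """Subtract vector b from vector a, element by element."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x - y for x, y in zip(a, b)]

result = subtract([1.5, -2, 0.5], [1, 0.5, 0])
print(result)  # [0.5, -2.5, 0.5]
```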

The reason that matters is that it explains one of the first indicators that something freaky was going on in those language models, way back when researchers started training them, which Michal Kosinski alluded to in an earlier podcast:

You train these models to predict the next word, and in the process, they learn an internal vector representation for every word (they turn each word into a list of 1000 numbers, and this mapping from word to vector evolves as they learn). Then, after learning, researchers looked at these vectors and asked, "hey, what happens if you take [vector for king]-[vector for man]+[vector for woman]?" Guess what: the answer is really close to [vector for queen]. Same goes for London-England+France=Paris. So these things have learned analogies, even though all they were trained to do was predict the next word. Once you realize that these models are learning to structure knowledge like this even though they're not explicitly trained to, you start thinking "ok maybe these are not just stochastic parrots after all."
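Here is a toy version of that king/queen arithmetic, as a sketch. The 3-number vectors below are invented by hand so the effect is easy to see; in a real model they would be learned from text and be much longer. The helper names are also just mine.

```python
import math

# Hand-invented 3-number vectors, for illustration only.
# In a real model these would be learned, not typed in.
vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}

def cosine(a, b):
    """Cosine similarity: near 1 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def analogy(a, b, c):
    """Return the word whose vector is closest to a - b + c, skipping the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

answer = analogy("king", "man", "woman")
print(answer)  # queen
```

Skipping the three input words when searching for the nearest vector is the usual convention for this kind of test, and I've followed it here.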

Good piece. François Chollet's book Deep Learning with Python, now in its 2nd ed. (Manning, 2021) has some accessible passages that help clarify the 'vector' notion you mention.