Our First Trump 2.0 Test (and How to Pass It)

Plus: Israel cooks lame duck. AI hits wall? Biden goes nuclear. US backs cybercrime treaty. Trump-Tehran rapprochement? Election denial goes bipartisan. And more!

Happy second week of the Trump 2.0 era—it’s been eventful! In last week’s sermon, I stressed the value of staying calm amid Trump turmoil and not overreacting to Trump’s provocations. But let’s face it: The man does give us reasons for genuine worry, and this week, IMHO, he gave us several. This sermon is about some of those developments—what’s concerning about them, how they’re likely to play out, and why there’s cause for hope that key political actors will be up to the challenge they pose. (Note: I taped this before Trump’s nomination of RFK Jr. as HHS secretary.)

—RW

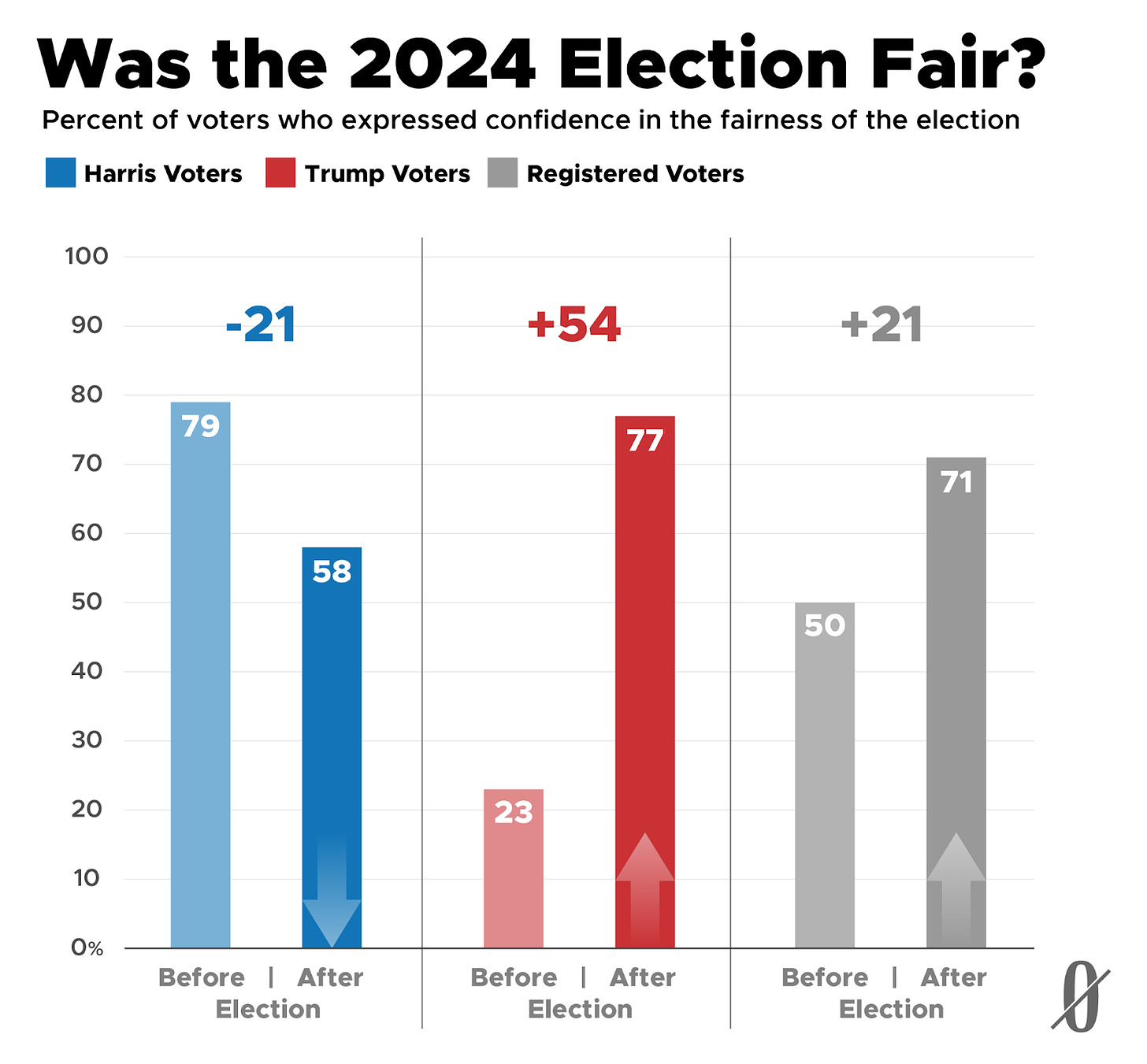

Public opinion researchers have found something many Republicans and many Democrats seem to agree on: The way to tell whether an election was fair is to see whether your side won.

Trump’s victory led both Republicans and Democrats to change their views about the fairness of the 2024 election, according to a YouGov/Economist poll. Before the election, 79 percent of Harris supporters said they had “a great deal” or “quite a bit” of confidence that the election would be conducted fairly; after Trump won, only 58 percent of Harris voters said the same. Trump supporters moved the other way. Before election day, only 23 percent of them had “a great deal” or “quite a bit” of confidence that the vote would proceed fairly, but afterward 77 percent of Trump voters said the contest was fair.

The Biden administration, which over the past year has developed a reputation for not following through on threats made to Israeli Prime Minister Benjamin Netanyahu, this week moved decisively to keep its reputation intact.

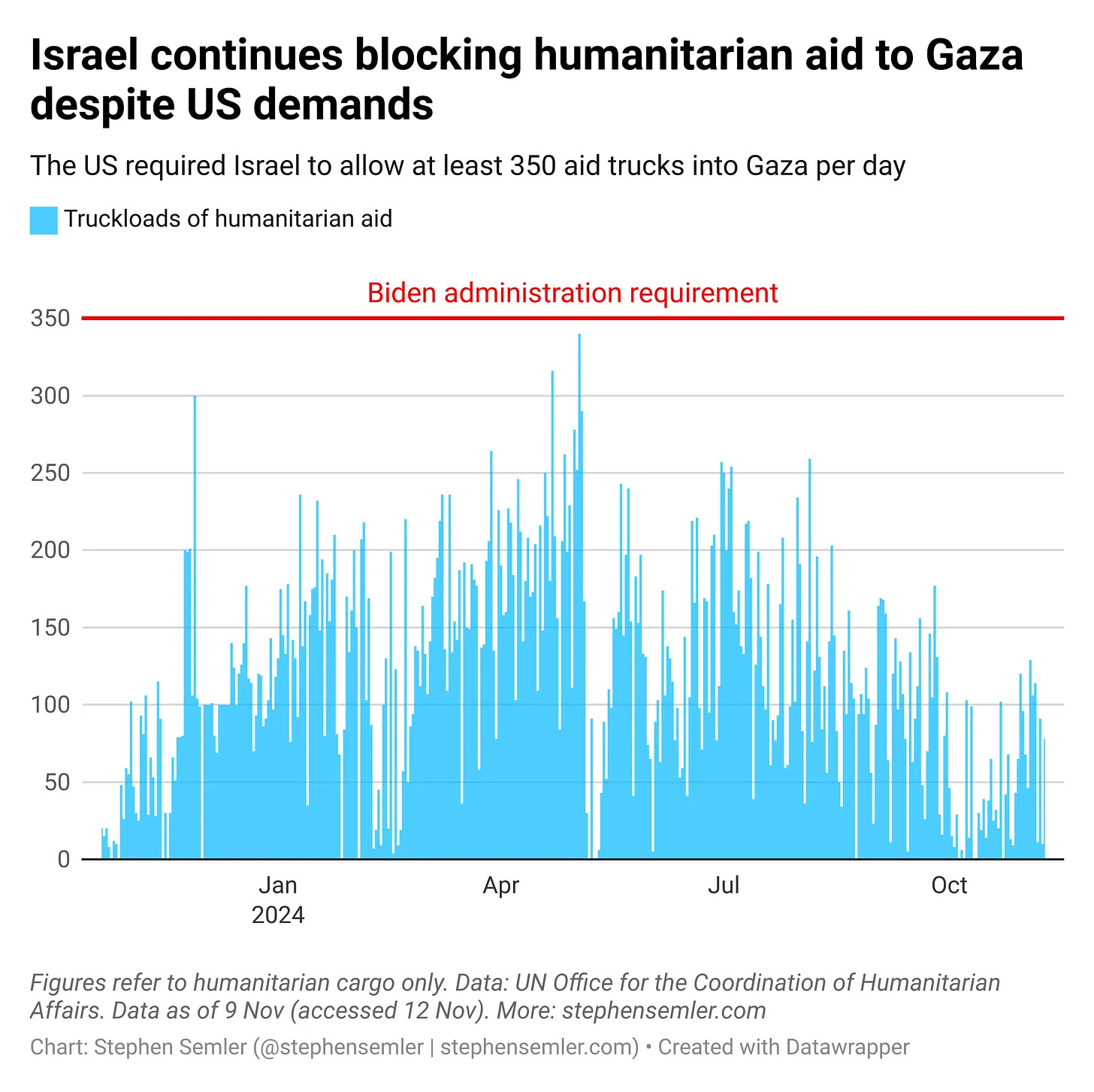

On Tuesday, Secretary of State Antony Blinken decided not to halt military assistance to Israel even though Israel had failed to meet the stated criteria for continued assistance. On October 13, Blinken and Defense Secretary Lloyd Austin had sent a letter to the Israeli government citing section 620I of the Foreign Assistance Act, which prohibits military assistance to any government that restricts the delivery of US humanitarian aid. The letter specified that “Israel must, starting now and within 30 days” take certain “concrete measures,” such as allowing 350 aid trucks per day to enter Gaza.

A report by a coalition of aid groups found that Israel not only failed to meet these demands but also “took actions that dramatically worsened the situation on the ground, particularly in Northern Gaza.” Some State Department officials also concluded that Israel had fallen short of the stated requirements. Stephen Semler of the Center for International Policy, after appraising United Nations data, wrote that between October 13 and November 9, Israel allowed an average of only 54 aid trucks per day into Gaza, and never more than 129—far short of the 350 stipulated by Blinken and Austin.

Explaining the State Department’s decision not to suspend military aid, spokesman Vedant Patel said, “We’ve seen some steps being taken over the past 30 days.” Patel pointed to the opening of some delivery routes and the resumption of some deliveries.

One reason some observers had hoped for bolder action by the White House is that, with Biden approaching the end of his presidency and the election in the rear-view mirror, there is less reason for him to fear antagonizing the Israeli government and its American supporters. It was under comparable circumstances that President Obama, in December of 2016, defied Netanyahu by declining to veto a UN resolution that declared Israeli settlements in the West Bank and East Jerusalem a “flagrant violation” of international law.

Are large language models hitting a wall? Top AI companies are “seeing diminishing returns from their costly efforts to build newer models,” according to Bloomberg. OpenAI’s forthcoming Orion model hasn’t met the company’s hopes when it comes to writing computer code, and Google insiders say the next version of Google’s Gemini model is “not living up to internal expectations” either, Bloomberg reports.

Bloomberg says this calls into question the “scaling law,” which posits that bigger models, using more computing power and trained on more data, inevitably make leaps in performance. In particular, there is concern that, although the models now under development were fed much more text and other data than the previous generation of models, that earlier generation had already largely exhausted the supply of high-quality data. Once you’ve vacuumed up virtually the entire fund of human knowledge, the pickings get slim. And apparently “synthetic” data (data produced by the large language models themselves and then used for training) hasn’t picked up the slack.

But this wouldn’t mean a dead end for AI progress or even, necessarily, a slowing of it.