Sam Altman’s Very Good, Very Bad Week

Plus: Zoom discussion tonight. What Israelis and Palestinians have in common. Superhuman AI fighter jets. Why do autocracies beat democracies in satisfaction polls? AI-boosted ISIS. And much more!

This week OpenAI got lots of attention for unveiling a new AI so much more lifelike than its old AI that the inevitable comparisons of it to Samantha—the Scarlett-Johansson-voiced AI in the movie “Her”—didn’t do it justice. But the company also got some attention that it would just as soon have avoided.

Jan Leike, who as co-leader of OpenAI’s “superalignment” team had been trying to figure out how to keep increasingly powerful AIs from becoming increasingly dangerous, resigned—without explaining why and without the usual formulaic tribute to the company he was leaving. He just tweeted “I resigned”—didn’t add so much as a period at the end. And the other co-leader of the superalignment team, Ilya Sutskever, also resigned—though Sutskever, a co-founder of OpenAI and its chief scientist, did include the usual formulaic tribute.

The immediate speculation was that Leike and Sutskever were leaving OpenAI because they think CEO Sam Altman, caught up in the race to become the planet’s dominant AI player, isn’t paying enough attention to the risks posed by AI.

Two days later, Leike removed all doubt about his motivation. In a Twitter thread, he said that his superalignment team had been “sailing against the wind” at OpenAI. “I believe much more of our bandwidth should be spent getting ready for the next generations of models, on security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact, and related topics.” At OpenAI, he said, “safety culture and processes have taken a backseat to shiny products.”

Notwithstanding the timing of his resignation, it’s unlikely that the Scarlett-Johansson-esque AI was the shiny product that pushed Leike over the edge. This AI—named GPT-4o, with the “o” standing for “omni”—doesn’t seem to be a big step toward the potentially planet-dominating “superintelligence” that many AI doomers worry about, or even toward the slightly lower threshold of “artificial general intelligence,” which, as Leike’s Twitter thread made clear, is the point in AI evolution where he thinks things get worrisome. In fact, GPT-4o’s performance on the standard tests of AI aptitude is barely better than that of the original GPT-4, which is now more than a year old.

But that’s what makes this AI a valuable specimen for purposes of illuminating AI risk. GPT-4o illustrates how AI can be socially disruptive—maybe even catastrophically disruptive—before it reaches the AGI threshold.

GPT-4o is “natively multimodal.” It was trained not just on text but also on voice and video. Among the implications of this are:

(1) If you have a conversation with it, it doesn’t have to translate your voice into text, run the text through a large language model, and then translate the text back into its voice. Skipping those two translations speeds things up enough to give GPT-4o’s conversations with a person a pace and rhythm strikingly like a conversation between two people.

(2) It is responsive to intonation, to the nonverbal cues that are carried by tone of voice and disappear when voice is turned into text. It can tell the difference between an angry, sarcastic “Sure, I’d love that!” and a cheerful “Sure, I’d love that!” It can also modulate its own tone as the occasion warrants.

(3) It has vision. Via your smartphone or webcam, it can survey you or your environs and answer questions about either. There were already versions of this functionality—on some smartphones, and on Meta’s camera-equipped Ray-Ban glasses—but native multimodality, along with other features of GPT-4o, carries the capability to a new level.

If you want to see how various elements of native multimodality combine to give AI new power, watch this demo video in which Sal Khan, founder of the Khan Academy, gets GPT-4o to tutor his son in geometry.

Tutoring is a good example of the dual-edged nature of AI. Imagine a world in which all children can have a personal tutor! Imagine a world in which lots of people who now make a living as personal tutors—or even as classroom teachers—suddenly don’t have jobs! AI will bring great things, but many of the great things have a flip side that could be socially disruptive in ways that are hard to gauge in advance.

We can eventually adapt to the disruptions. (If tutors can’t be retrained, who can?) But if enough disruptions hit at roughly the same time, that could be a large-scale problem—all the more so given that many nations are already a bit out of equilibrium.

A few more two-edged swords that come into sharper view once you see a natively multimodal AI in action:

1. Before long, lots of people will have an AI as a friend or therapist. That’s good—there are lots of lonely people, and lots of people whose mental health could use improving (like, at last check, all of us). But what happens when these friends and therapists are provided by companies that—like social media companies—aim to maximize your engagement with their product? In other words: aim to get you addicted to their bots. And how insidious could advertising get when delivered by subtly persuasive bots? And what if someone with aspirations to become a cult leader starts out by founding a “therapy” AI startup that garners a million customers with exactly the kind of psychological vulnerabilities that make cults attractive?

2. And speaking of maximizing engagement: For all the mockery directed at Mark Zuckerberg two years ago for betting so heavily on the coming “metaverse”—on deeply immersive virtual reality—the metaverse is coming, sooner or later. And it will be populated by AI-driven characters that give it increasingly lifelike drama. (GPT-4o can already serve as the voice for a realistic-looking two-dimensional fake person; a third dimension will no doubt arrive.) This could bring wonderfully enriching educational experiences, and various kinds of wholesome fun. It could also bring lots of other things, like complexly gamified pornography (or just complexly gamified romance) that, among other side effects, further reduces the number of adolescents who pursue real romance with real humans.

3. Listening to annoying music while waiting on the phone for someone to give you customer support will within a couple of years be a thing of the past; a helpful voice will pipe up as soon as you dial. But making a living by giving people customer support on the phone will also become a thing of the past.

Not all of these things will wind up being big challenges, and we can eventually adapt to the ones that do; our species is surprisingly good at coming to terms with change. But in the meantime things could get pretty weird pretty fast.

I haven’t come close to exhausting the list of challenging novelties that may be created by increasingly lifelike multimodal AIs. And I haven’t listed the various challenges that will be posed by not-so-lifelike, not-so-multimodal AIs. (For example: AIs influencing politics by exercising their documented persuasive skills via text exchanges on social media; AIs displacing workers by doing things like writing and computer programming; AIs being deployed by bad actors to do new kinds of viral hacking.)

Whether you’re worried about the long-term threat of superintelligent rogue AIs or the more mundane, nearer-term challenges of the kind I’ve mentioned, one moral of the story stays the same: It would be nice if the pace of AI evolution didn’t reach breakneck speed. And if you doubt that things are moving pretty fast, check out the things unveiled by Google the day after OpenAI’s big reveal. It looks like everything in GPT-4o will before too long be available from Google, along with lots of other stuff.

Slowing down AI is a big political ask, especially amid the arms-race mentality fostered by America’s growing Chinaphobic hysteria. So I’m not asking for it! What I would ask is that people who profess to be concerned about the many challenges posed by AI (1) not argue against proposed regulations solely on the grounds that they “stifle innovation”; (2) not argue that we need whole new multi-billion-dollar power-plant-building initiatives just so we can train the next generation of AIs ASAP.

I was heartened last week when Sam Altman, in an online conversation hosted by the Brookings Institution, said, “The thing I'm most worried about right now is just the speed and magnitude of the socioeconomic impact that this may have.” Given that Altman was already on record as being concerned about the sci-fi doom scenario—a human-squashing superintelligent AI—this means he has a broad-spectrum conception of AI risk.

So presumably he’s on board for slowing things down a bit?

Guess again. In that same conversation he expressed concern that the “energy bottleneck” could keep the world from firing up as many AI chips as he thinks the world should fire up. The challenge of powering all the data centers we must build “seems super hard right now.”

These sentiments lend credence to the Wall Street Journal’s report a few months ago that Altman was trying to drum up trillions of dollars of investment to expand the planet’s chipmaking and energy-producing capacity. These sentiments also help explain why Jan Leike—and maybe Ilya Sutskever as well—is no longer at OpenAI. —RW

PS: We just posted my conversation with AI doomer—and “Pause AI” activist—Liron Shapira. Liron and I discussed the OpenAI resignations and then delved into the plausibility of the more extreme AI doom scenarios. You can watch on YouTube or listen on the Nonzero podcast feed.

A new global opinion survey raises a question about democracies: Why do so many people who live in them think they’re not working well?

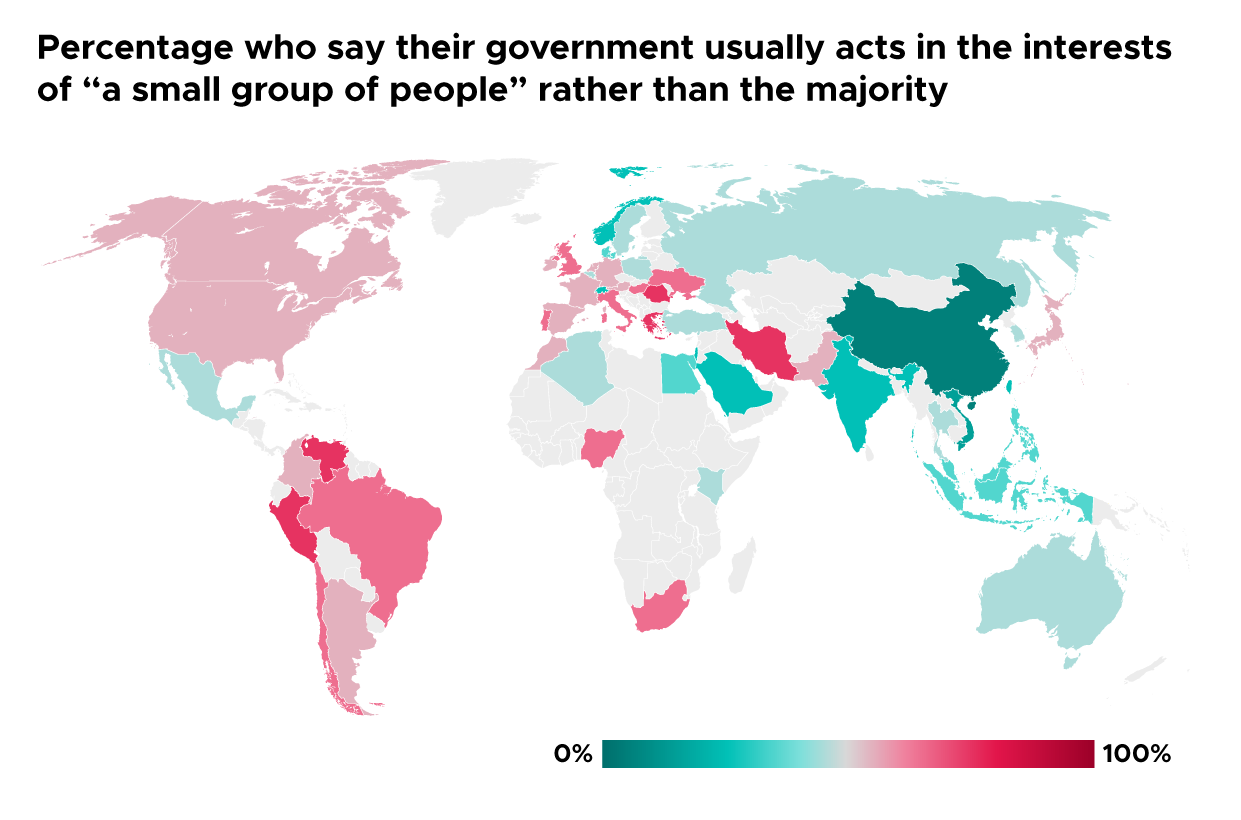

People in the US and western Europe are more likely than people in China, Russia, and other non-western nations to say their government serves a “small group of people in my country” rather than the majority, according to this year’s report from the non-profit Alliance of Democracies.

So what does this mean? Why is the right side of the map above so green, with the left side tilting toward red? We don’t know, so we’ll leave it for NZN readers to hash this out in the comments section.

Is it true that Israelis are coldly indifferent to the suffering being inflicted on civilians in Gaza—and may even welcome it as payback for October 7? And is it true that Gazans not only countenanced but celebrated the mass murder of Israeli civilians on October 7?

One of the few bits of good news to have emerged from this horrible conflict is that the answer to both questions is: Not as true as you might think. On both sides, it turns out, there is a kind of socially imposed perceptual filter that keeps many people from having to confront the worst things that have been done in their name.

The Wall Street Journal reported this week that, “On Israeli television, there is virtually no footage of dead Palestinians and only some scenes of the destruction” in Gaza. Instead, Israeli TV—a key source of information for three-quarters of Israelis—covers battlefield developments, Israeli military casualties, and personal stories of Israelis affected by October 7 atrocities.

Even when scrolling the news on their smartphones, many Israelis rarely encounter images of death and destruction in Gaza, the Journal writes. In an April survey, nearly two-thirds of Israeli Jews reported having seen few such images or none at all since the war began.

That’s very different from what people in the Arab world are seeing. Al Jazeera, the region’s main broadcaster, routinely shows images of suffering in Gaza but has shown little footage from October 7. A survey conducted in Gaza and the West Bank in March found that 80 percent of the Palestinians polled still hadn’t seen videos of the October 7 attacks; 60 percent said the media they consumed didn’t show the videos, and 20 percent said they didn’t want to watch them. More than 90 percent of Palestinians said they didn’t believe Hamas had committed atrocities against Israeli civilians during the attacks.

Maybe calling all this perceptual filtering “socially imposed” is too generous. The fact is that most people would rather not see the worst things being done in their name. In that sense, Al Jazeera and Israeli media are just giving people what they want—and not giving them what they don’t want.

That’s something social media algorithms are also good at. Which helps explain why, though social media can in principle help people circumvent mass media and get the whole truth about who their soldiers are killing, that tends not to happen. Social media algorithms are good at reinforcing our stories about our tribes, and one of the most popular such stories is that the other side wantonly kills innocents but our side doesn’t.

Four AI Updates:

The US and China had their first bilateral discussions on AI safety this week. Officials from the two nations, meeting in Geneva, agreed that AI carries risks that call for international cooperation and that further dialogue would be a good idea. But Chinese officials complained about “restrictions and pressures” from the US, which has organized an international coalition to keep high-performance microchips and other AI-related technologies out of China. And American officials, according to National Security Council spokesperson Adrienne Watson, “raised concerns over the misuse of AI” by China, though she didn’t cite any examples of such misuse.

A bipartisan group of US senators, including majority leader Chuck Schumer, called for $32 billion in federal spending on AI-related things. Schumer said that if China is "going to invest $50 billion, and we're going to invest nothing, they'll inevitably get ahead of us. So that's why even these investments are so important."

AI pilots are “roughly even” with human pilots, and on track to surpass them, when it comes to flying F-16 fighter jets, said Air Force Secretary Frank Kendall at a recent conference. He should know. Earlier this month, Kendall took a one-hour flight in the cockpit of an AI-piloted F-16. The modified F-16 Fighting Falcon reached speeds above 550 mph and executed tactical maneuvers in a mock dogfight with a human-piloted aircraft—all without Kendall or the safety pilot touching the controls. The initial use envisioned for autonomous fighter jets is as wingmen that support a jet piloted by a human who makes all the life-or-death decisions.

Apparently some very bad actors have started using AI. On social media, supporters of the Islamic State (aka ISIS) are posting AI-generated news broadcasts complete with AI-generated news anchors who spread the group’s extremist views, writes Pranshu Verma of the Washington Post. “The videos offer some of the earliest signs of AI helping terrorist groups quickly disseminate propaganda and recruit members… and have even sparked an internal debate over the use of the technology under Islamic law.”

Liberal journalists are living in "a lefty social bubble" when it comes to the war in Gaza and, as a result, are overestimating the political costs for President Biden of supporting Israel, writes statistics guru Nate Silver in his newsletter.

Silver concedes that the war in Gaza is bad for Biden, since it’s turned some progressives against him. But by Silver's calculations, only “0.5 percent of the American electorate are 2020 Biden voters who say they’ll withdraw their vote from Biden because he’s too far to their right on Israel.” While shifting to the left on Israel-Palestine could help Biden win back some of these disproportionately young voters, staying the course helps him keep the support of older voters who are pro-Israel—and older voters are more likely to turn out on election day. And, as Silver notes, even young people generally don’t rank Gaza as a high-priority issue.

Some have pointed to one omission in this kind of calculus: Though younger voters who are alienated by Biden’s position on Gaza may be few in number, they’re more likely to serve as campaign volunteers—knocking on doors, phone-banking, and in other ways being force multipliers. True, most campus protesters probably wouldn’t have been Biden volunteers in any event—but their protests may have made other young progressives reluctant to get on the Biden train.

On the other hand, another factor left out of Silver’s analysis works in the opposite direction: Pro-Israel Democrats provide not just votes, but, in some cases, big campaign donations—much bigger, in the aggregate, than donations from pro-Palestinian Democrats.

So maybe Silver’s model, after acquiring these two bits of nuance, leads to the same conclusion it led to originally.

The parliament of Georgia incurred much western criticism this week by passing a bill that would require NGOs and media outlets that get more than 20 percent of their funding from foreign sources to register as foreign agents. The bill, which Georgia’s president hasn’t signed into law, was passed even though a group of American legislators—led by the chairman of the House Foreign Affairs Committee—had threatened to levy US sanctions against Georgia in response to it. In a letter to Georgia’s prime minister, they wrote, “We draw your attention to the proposed bill’s similarities to a law against ‘foreign agents’ enacted in Russia by Vladimir Putin in 2012, which was also justified under the guise of ‘transparency’.”

One thing they didn’t draw the prime minister’s attention to is the proposed bill’s similarities to an NGO “transparency” law enacted in Israel in 2016. The Guardian reported at the time, “Critics say the legislation will target about two dozen leftwing groups that campaign for Palestinian rights while excluding rightwing pro-settlement NGOs, who will not be required to reveal their often opaque sources of foreign funding.”

This kind of American inconsistency—punishing some countries for doing things while tolerating those things in other countries—is more apparent to people beyond America’s borders than to people within them. And that helps explain why America’s ongoing campaign to promote liberal democracy (except in the various countries, including several in the Middle East, where America doesn’t seem interested in promoting liberal democracy) is viewed cynically in many countries. Which in turn helps explain why western-backed democracy-promoting NGOs in places like Ukraine and Georgia are viewed by some in those countries as nefarious foreign agents. Which in turn helps explain… this bill getting passed by Georgia’s parliament.

Helen Andrews of The American Conservative says the controversial bill is sensible, noting that Georgia has thousands of foreign-funded NGOs that engage in partisan political activities. “Foreign interference in elections has been a sore spot in our own politics recently,” Andrews writes. “We can hardly begrudge the Georgians their similar concerns.”

This week brought some clarity about why Niger demanded this spring that all 1,100 US troops stationed in the country be withdrawn: Niger’s leaders didn’t like being scolded by US diplomats. Shortly before the military partnership fell apart, the State Department’s top official for Africa, Molly Phee, had discouraged Niger from strengthening ties with Russia and Iran and threatened sanctions if it sold uranium to the latter. In an interview with the Washington Post, Niger’s prime minister recounted a meeting with Phee:

“When she finished, I said, ‘Madame, I am going to summarize in two points what you have said. First, you have come here to threaten us in our country. That is unacceptable. And you have come here to tell us with whom we can have relationships, which is also unacceptable. And you have done it all with a condescending tone and a lack of respect.’”

The perceived disrespect wasn’t the only issue. After a military coup in Niger last year, the US froze security support and paused counter-terrorism operations but kept its troops in place, to the frustration of the new junta government. Niger then turned to Russia for military training and aid, leading to the awkward situation of Russian and US troops being stationed on the same air base. Last month, the Pentagon announced it would begin withdrawing troops soon.

—By Robert Wright and Andrew Day

Earthling banner art created by Clark McGillis. Democracy map adapted by Clark McGillis.

ZOOM AMA!! Link below to today’s 6 pm (US Eastern Time) discussion!