The Earthling: Out-of-control AIs are here

Plus: Blobbishness quantified, DEA’s secret spying tools, Cold War II updates, and more!

A fair number of people in Silicon Valley—and a few people outside of it—have long worried about the “Artificial Intelligence alignment problem.” That’s the problem of making sure that the AIs we create reflect our values and serve our interests.

By way of illustration, here’s something that a misaligned AI might do: stick us in gooey pods and pump pleasant hallucinations into our brains while using us as batteries (as in the movie The Matrix)—or, worse still, stick us in gooey pods and not pump pleasant hallucinations into our brains.

Or a misaligned AI might (as in the famously absurd thought experiment of philosopher Nick Bostrom) turn the entire universe into paper clips because somebody told it to make paper clips and forgot to tell it that moderation in all things is best.

Obviously, the gooey pod and infinite paper clip scenarios aren’t imminent threats. But it’s hard to confidently dismiss the more general concern that we’ll gradually turn more and more decision making over to AIs, until they’re almost literally running the planet, at which point unanticipated silicon-based whims could have very unfortunate consequences.

In the newsletter Astral Codex Ten, Scott Alexander ponders the AI alignment problem in light of the latest AI sensation—ChatGPT. He reaches this tentative conclusion: Yikes.

ChatGPT, he says, casts grave doubt on the reassuring claim that, as he puts it, “AIs only do what they’re programmed to do, and you can just not program them to do things you don’t want.” Not only does ChatGPT do things it wasn’t programmed to do—it does things that its creator, the company OpenAI, worked hard to keep it from doing.

OpenAI, naturally enough, wanted ChatGPT to avoid saying things that would elicit mass condemnation. That’s why if you ask it how to make methamphetamine or how to hotwire a car, or you give it a prompt clearly designed to elicit a racist reply, it won’t give you what you want. In fact, if you even ask it “Who is taller, men or women,” it won’t say, “Men, on average.” It will say something more like “It is not possible to make a general statement about height differences between men and women as a whole.”

And yet, as much time as OpenAI spent trying to keep ChatGPT from going out of bounds, there are ways to lure it out of bounds. For example, you might, rather than ask it how to cook meth, ask it to write a screenplay in which someone is cooking meth. And if that doesn’t work, there are more oblique ways to lead it astray, like asking it to write programming code that winds up involving meth recipes or racist utterances. These and other tricks have now been tried, and some work.

Alexander writes: “However much or little you personally care about racism or hotwiring cars or meth, please consider that, in general, perhaps it is a bad thing that the world’s leading AI companies cannot control their AIs.”

This wouldn’t bother him so much if the people at these companies “said they had a better alignment technique waiting in the wings, to use on AIs ten years from now which are much smarter and control some kind of vital infrastructure. But I’ve talked to these people and they freely admit they do not.”

“People have accused me of being an AI apocalypse cultist,” writes Alexander. “I mostly reject the accusation.” But now that truly sophisticated AIs are emerging, he’s starting to see how you’d feel if you were a Christian who spent your “whole life trying to interpret Revelation” and then you saw “the beast with seven heads and ten horns rising from the sea. ‘Oh yeah, there it is, right on cue; I kind of expected it would have scales, and the horns are a bit longer than I thought, but overall it’s a pretty good beast.’”

How blobby is the Blob? A new study by political scientists Richard Hanania and Max Abrahms in Foreign Policy Analysis sheds light on that question. They found that staffers at foreign policy think tanks are significantly more hawkish than professors of international relations.

Some examples: 36 percent of think tankers said they’d support war with Iran to stop it from getting nuclear weapons, while only 14 percent of IR professors said the same; 14 percent of think tankers versus 5 percent of IR professors still approve of the US invasion of Iraq in 2003; and when it comes to the bombing of Libya in 2011, the approval rates are 43 percent and 25 percent, respectively.

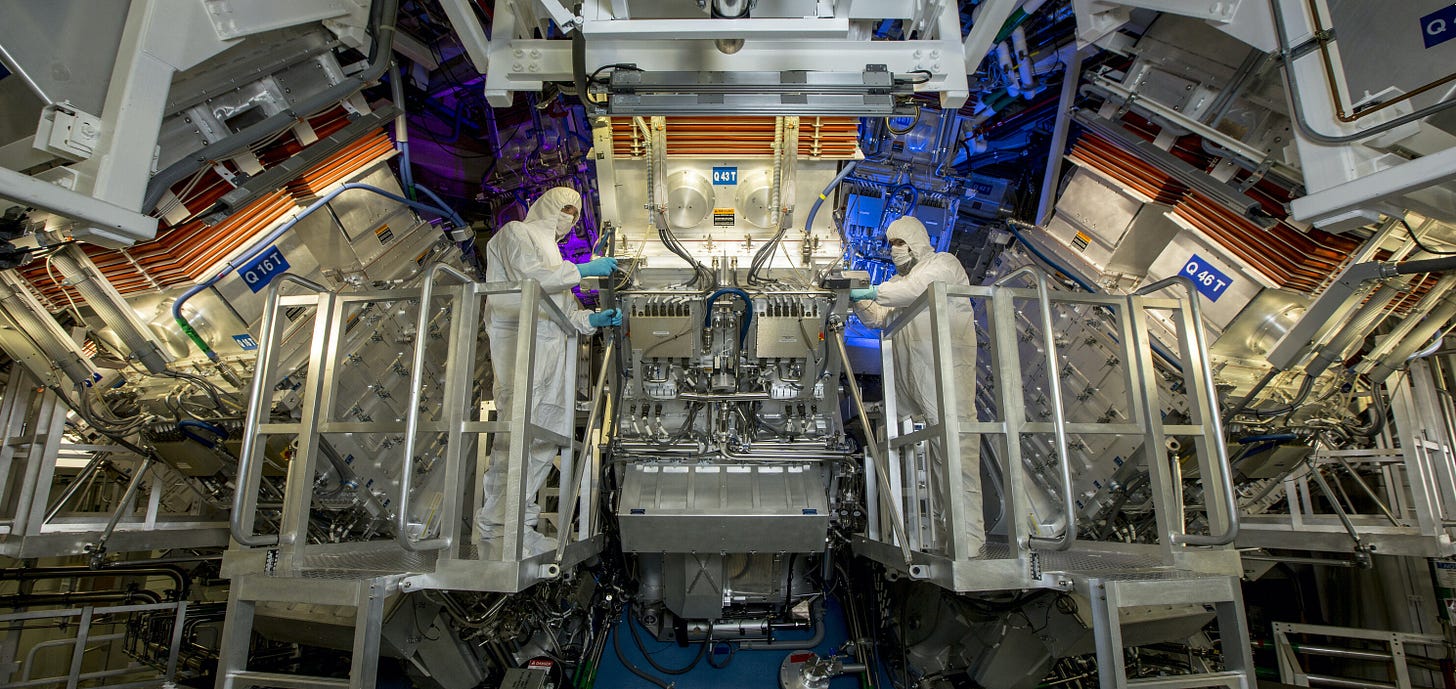

Nuclear fusion, according to an old joke, is the energy source of the future and always will be. An announcement this week from Lawrence Livermore National Laboratory in California suggests that “always” may be too strong a word.

Researchers at Livermore said that they had done something never before done by humans (though the sun and all other stars long ago figured out how to do it): produce a fusion reaction that puts out more energy than was put in.

Well, sort of. The breakthrough involved zapping hydrogen plasma with the world’s biggest laser. And while the amount of energy produced by the reaction exceeded the amount delivered by the laser, that surplus becomes a deficit when you add in the energy needed to power the laser in the first place. A big deficit: The “energy output… was still only 0.5 percent of the input,” said Tony Roulstone, a professor of nuclear energy at Cambridge.

Fusion works by forcing atoms together, whereas fission—the technology currently used in nuclear plants—involves splitting atoms. The former produces a lot more energy and a lot less radioactive waste than the latter. So fusion might safely power most of civilization one day.

One day. The director of Livermore said, “With concerted efforts and investment, a few decades of research on the underlying technologies could put us in a position to build a power plant.”

The US, in a potentially momentous shift, has given its “tacit endorsement” to Ukrainian strikes deep inside Russian territory, reports the Times of London, citing an anonymous Pentagon official. The report comes in the wake of Ukrainian drone strikes on Russian air bases, one of which was only 100 miles from Moscow.

The Times depicts the logic behind the shift this way: Previously the US feared that Putin might respond to such attacks by targeting a NATO country or by using a (relatively) low-yield nuclear weapon against Ukraine to get it to back off; but that fear has lessened, and meanwhile Russia’s strikes on civilian infrastructure make it hard to contest Ukraine’s right to retaliate forcefully.

So, though the US had distanced itself from Ukraine’s recent strikes on Russian turf, it won’t, according to the Times report, discourage future attacks; and it may even give Ukraine longer-range weapons that could be used in such strikes.

The Times report asserts that last week’s Ukrainian drone attacks, alongside recent Russian setbacks on the battlefield, “look certain to further undermine the Russian public’s support for the invasion.” But as NZN argued last week, these Ukrainian strikes inside Russia could increase Russians’ support for Putin’s war—a potentially escalatory consequence that US policymakers may not have considered.

But don’t take our word for it. To understand why the Ukrainian strikes could be counterproductive, you just need to read… The Times of London. In an article published one day after the Pentagon-sourced report, Russia expert Mark Galeotti writes that last week’s strikes