The integration of America’s artificial intelligence industry into the military-industrial complex proceeds apace. The latest evidence comes in the form of two things that happened the week of the presidential election and so got little attention. One involved Meta, and the other involved Anthropic, one of the “big three” makers of proprietary large language models (along with OpenAI and Google).

The development involving Anthropic was particularly important, because its back story—which, like the story itself, didn’t get much attention—is testament to the formidable support in Silicon Valley for a confrontational approach to China.

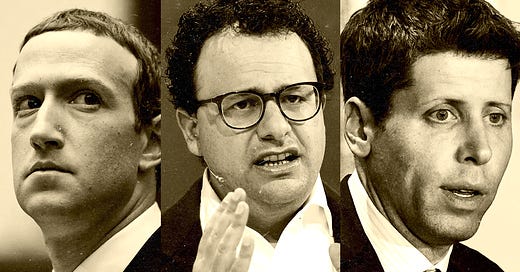

Two days after the election, Anthropic announced that it would partner with Silicon Valley defense contractor Palantir to help US intelligence and defense agencies make use of Anthropic’s Claude family of LLMs. Three days earlier, Meta had said it was making Llama, its large language model, “available to US government agencies, including those that are working on defense and national security applications, and private sector partners supporting their work.” Those partners will include Lockheed Martin and, again, Palantir (whose eccentric CEO was profiled by NZN’s Connor Echols in September).

In undertaking these military collaborations, both Meta and Anthropic crossed a line they had never crossed before.

In the case of Meta, the move had a fairly obvious explanation. Meta’s announcement came on the heels of reports that researchers linked to the Chinese military were using Llama. That news was ammunition for those who had warned that open-source models such as Llama could be exploited by China, and had argued that the US should therefore require the most powerful LLMs to be closed source. Such a regulation would undermine Meta CEO Mark Zuckerberg’s AI strategy, so it makes sense that Meta would counter this narrative by casting its open-source AI as a US military asset.

Anthropic’s move had a less obvious motivation and even seemed, superficially, out of character. Though there’s a long tradition of tech companies doing business with the Pentagon (profit, after all, is their goal), Anthropic had always seemed less profit-driven than the average company. It’s a “public benefit” corporation that was founded by people who left OpenAI because they felt that Sam Altman, OpenAI’s boundlessly ambitious CEO, wasn’t taking AI safety seriously enough. Whereas OpenAI opposed a recent AI safety bill (SB 1047) that was vetoed by California Governor Gavin Newsom, Anthropic didn’t. Surely, if any major AI company was going to resist the allure of the military-industrial complex, it would be Anthropic?

Guess again.

Anthropic’s embrace of the Pentagon shouldn’t have surprised anyone who had been closely reading the tea leaves. Co-founder and CEO Dario Amodei, in a few largely overlooked paragraphs of an AI manifesto he posted in October, had shown himself to be no lefty peacenik. Most discussion of the 15,000-word essay (including Amodei’s epic recent conversation with podcaster Lex Fridman) had emphasized its profuse extolling of the possible benefits of AI and had ignored something else in it:

Amodei, in his essay, embraces the “democracy versus autocracy” framing of US foreign policy popular in the Biden administration and elsewhere in the Blob. And, accordingly, he turns out to be something of a China hawk. He considers it imperative that a coalition of the world’s democracies “gain a clear advantage” in AI by “securing its supply chain, scaling quickly, and blocking or delaying adversaries’ access to key resources like chips and semiconductor equipment.” That last part means he supports the Biden administration’s “chip war” against China—which, as previously noted in NZN, straightforwardly increases the incentive (or, strictly speaking, decreases the disincentive) for China to invade Taiwan.

Once this coalition of democracies has used AI to “achieve robust military superiority,” Amodei writes, it should selectively share AI technology with nations that agree to support “the coalition’s strategy to promote democracy.”

In this way, “the coalition would aim to gain the support of more and more of the world, isolating our worst adversaries and eventually putting them in a position where they are better off taking the same bargain as the rest of the world: give up competing with democracies in order to receive all the benefits and not fight a superior foe.” So this is the ultimatum China would face at this great culminating crossroads in world history.

It’s not clear what it would mean for China to “give up competing with democracies,” since Amodei never tells us which forms of China’s current competition with democracies he finds unacceptable. In fact, he never even spells out the senses in which China is “competing with democracies” per se (as opposed to just doing the things rising powers do, like project military power regionally, expand economic influence globally, and throw its weight around within international institutions). So this critical juncture in world history is a bit fuzzy. But it’s clear that Amodei expects AI to be the tip of the spear of America’s global projection of power. And it’s clear that he wants America to use that power assertively.

Hints that Anthropic would ultimately favor a confrontational approach to China existed long before Amodei’s manifesto. One of the company’s seed funders was former Google CEO Eric Schmidt, an influential China hawk who helped mobilize support for Biden’s chip war against China.

To emphasize Schmidt’s relevance to Anthropic’s emerging ideology isn’t to cast aspersions on Amodei. The idea here isn’t that Amodei was once a China dove but sold his soul in exchange for funding that helped get Anthropic off the ground. Indeed, people I know who know Amodei say he is genuinely public spirited and isn’t driven by the pursuit of power in the way that Sam Altman seems to be.

Rather, the idea is that someone as intensely ideological as Eric Schmidt, and as deeply immersed in the military-industrial complex, would be unlikely to help launch an AI company run by a peacenik, or even by a foreign policy realist who favors nurturing harmonious relations with China. It seems highly likely that Schmidt got a sense of Amodei’s world view before participating in Anthropic’s Series A funding.

The AI industry’s enlistment in the Cold War against China (and in a possible hot war) seems disconcertingly ironic to those of us who believe that the AI revolution could go badly awry if China and the US don’t cooperate to keep that from happening. And the industry’s role in deepening the Cold War goes beyond its growing collaboration with the Pentagon. Lots of AI industry lobbying pitches—for subsidized power plants, loose regulation, whatever—pack more of a punch if depicted as a way to counter the Chinese menace. So the entire industry—an industry that’s building machines that will increasingly shape our thinking—has an interest in amplifying our fear of China.

Only last week, OpenAI called for massive government investment in AI infrastructure to compete with China. The language of the proposal would seem to put Sam Altman and Dario Amodei shoulder-to-shoulder in the global struggle against tyranny. The US, says OpenAI, faces “a national security imperative to protect our nation and our allies against a surging China by offering an AI shaped by democratic values.” To that end, we should assemble a “global network of US allies and partners.”

As for collaboration between OpenAI and the Pentagon: Last month the Intercept reported the Pentagon’s “first confirmed OpenAI purchase for war-fighting forces.” Ten months earlier, OpenAI had quietly removed a passage from its usage policy that had prohibited military applications of its technology. Meta, for its part, only this month got around to “clarifying” that the ban on military applications found in its LLM terms of use wasn’t meant to apply to the American military. Here, as with so many things, Sam Altman was ahead of the curve.

Header image by Clark McGillis.

I think I need to understand two things better. 1. What would stop an authoritarian power (be it China, Russia, North Korea, Iran or some other country) from working on AI in secret even if it did agree to some AI limits or inspections via a treaty process? Have we been here before with the nuclear bomb? 2. I think I need a refresher on how things would go for the "free world" if the "authoritarian world" won an AI war and could indeed dominate the world on its terms. Which system having the high ground would be the better option? Seems like that would not go too well for freedom-loving people. Then again, maybe I am still too stuck in the "us vs them" world view. I have this lack of clarity in spite of reading and watching Bob since before our at least arguably authoritarian President was with us the first time in 2016. I am just glad I am not the one who has to make the decisions on how to proceed in these matters. Sigh.

I'm not sure to what extent the escalating tensions with China are being driven by defense and China Hawks, as much as they are being influenced by corporate interests. It seems that the dominance of the American tech industry is under threat, essentially being outcompeted, so these companies are taking steps to hinder the competition. Eric Schmidt is an interesting case in point, given his pursuit of Cypriot citizenship (https://www.vox.com/recode/2020/11/9/21547055/eric-schmidt-google-citizen-cyprus-european-union) and his recent advice to students to steal TikTok's intellectual property (https://finance.yahoo.com/news/ex-google-ceo-schmidt-advised-232209286.html). Something about it feels a bit disingenuous.