The AI Wave Accelerates

Plus: Elon Nazi Rorschach test, Trump’s busy first week, Russian “sabotage” debunked, non-depressing climate news, and more!

Save the date: I’ll be doing a Q&A Zoom call with NonZero members (sometimes crassly referred to as “paid subscribers”) on Thursday February 6 at 8 pm US Eastern Time. The main topic will be the first three weeks of Trump II, but questions on other topics are welcome, too. The Zoom link can be found at the bottom of this newsletter, behind the paywall. —Bob

—OpenAI unveiled Operator, an “agentic” AI that can take control of your computer and perform simple tasks, such as reserving a table at a restaurant. For now Operator is available only at OpenAI’s $200-per-month “Pro” tier, but, judging by how the live demo went, maybe that’s just as well: After filling an online shopping basket with items on a shopping list, Operator failed to execute the human operator’s instruction to buy them (10:45), and when told to go to StubHub to buy tickets, it deemed that site off limits (12:25). (See below for more about AI—including reasons to believe that AIs will soon get better at autonomously pursuing assigned goals.)

—President Trump terminated Secret Service protection for John Bolton, his former national security adviser, who in 2022 was identified as the target of an alleged Iranian assassination plot. During a press conference, Trump said Bolton was a “warmonger” who had convinced George W. Bush “to blow up the Middle East.” (For more on Trump’s eventful first week back in the White House, see below.)

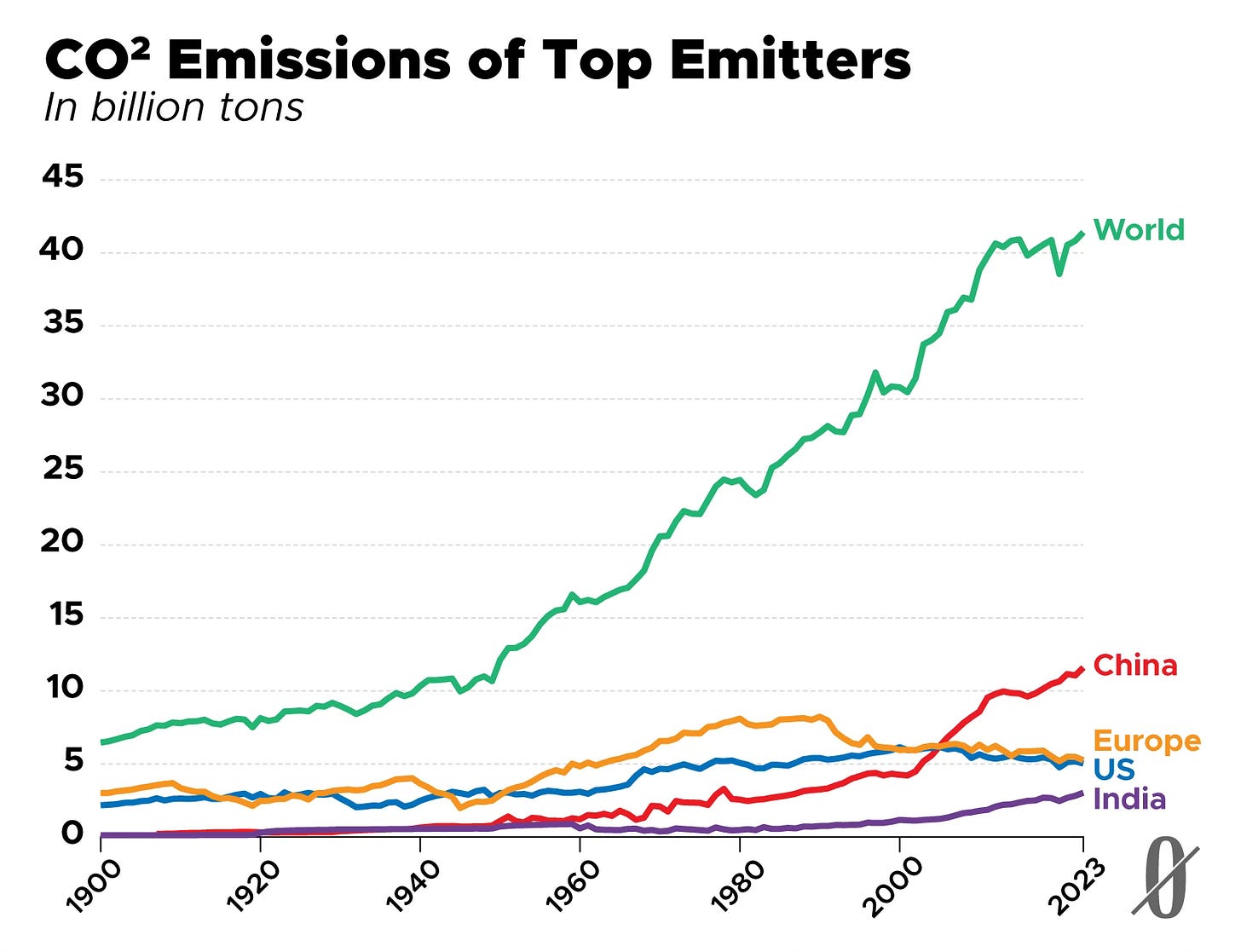

—In non-depressing climate-change news: Trees can absorb more carbon and store it for longer than previously thought, writes journalist Katherine Latham for the BBC. Scientists infused an English woodland with CO2 and found that the trees responded by growing extra bark to hold much of the additional greenhouse gas.

—Men have gotten taller and heavier at twice the rate of women over the past century, writes Ian Sample of the Guardian. The authors of a recent international study speculate that one driver of the trend is women’s preference for tall, formidable men as mates.

—Amid a boom in satellite launches, an international group of scientists has called on the United Nations to add protecting Earth’s orbit to the UN’s list of 17 sustainable development goals. In Scientific American, space environmentalist Moriba Jah advocates a “circular economy” approach to the “space junk” problem: finding ways to recycle space objects so that fewer of them remain in orbit.

Screenwriter Paul Schrader—famous for the scripts of Taxi Driver, Raging Bull, and other lauded films—recently played around with ChatGPT and found himself discomposed. He called the interaction “an existential moment” that brought to mind the defeat of chess master Garry Kasparov by IBM’s Deep Blue in 1997. “I just sent chatgpt a script I'd written some years ago and asked for improvements,” Schrader wrote on Facebook. “In five seconds it responded with notes as good or better than I've ever received [from] a film executive.”

As Schrader’s peers were quick to note, surpassing Hollywood executives in discernment is at best a minor technological advance. But it turns out there was more to the story: “I asked it for Paul Schrader script ideas,” Schrader wrote. “I[t] had better ideas than mine.”

This week brought other signs, as well, that the AI revolution may unfold faster and more momentously than many people had been assuming. A Chinese company unveiled a new large language model, DeepSeek-R1, which elicited reactions among AI watchers that, depending on their feelings about China, ranged from “Wow!” to “Oh no!”

R1 is one of the new breed of “reasoning” LLMs, engineered to engage in extended “chain-of-thought” reflection. This reflection lends particular strength to math and science skills but can also aid common sense reasoning, complex planning, and the autonomous pursuit of assigned goals. The first such model—OpenAI o1—was unveiled in September, and R1 is comparable to it in performance and much cheaper to use. What’s more, it’s an open-source (or, technically, “open-weights”) model, which means it will spur progress by other AI researchers more powerfully than OpenAI’s proprietary models do.

Seems like only yesterday people were saying AI progress would soon slow down because the “scaling laws” were losing force; the addition of more computing power and more data during the training of large language models was bringing diminishing returns. But the rapid evolution of the new “reasoning” LLMs (which demand much more computing power than conventional LLMs while being used, but not while being trained) has over the past few months marginalized that concern. OpenAI has already announced but not released a new reasoning model, o3, that is a clear improvement over o1. And Google’s reasoning model, introduced in December, has shown a sharp increase in math and science skills over the course of only a month.

Meanwhile, as the technology advances, AI potentates are working to make sure there are enough power plants and microchip clusters to allow rapid rollout. This week OpenAI CEO Sam Altman joined President Trump at the White House to unveil a big infrastructure project that will be funded via the venture capital firm SoftBank.

The vibes were entirely upbeat. When Trump said that building and maintaining the infrastructure will create lots of jobs, Altman refrained from noting that, as he has acknowledged on other occasions, untold numbers of American workers will be displaced by AI. And he didn’t repeat his observation from two years ago that, though our AI future could be filled with wonders, the worst-case AI scenario is “lights out for all of us.”

Altman is right about the wonders—including the coming medical advances he extolled at the White House. Still:

There is a growing sense among many AI researchers that artificial general intelligence (AGI)—a threshold different people define differently, but that will by any definition have huge social impact for good and ill—is going to arrive sooner than they thought even six months ago. Maybe as soon as next year. And many in the AI community—not just hard-core doomers—are worried about this impact and the fact that so few people are talking about it. (These worriers include AI safety researchers who have recently left OpenAI.)

Altman isn’t the only executive at a major AI company who seems to be saying less and less about the downsides of AI. But these execs are going to have trouble putting their past worries behind them, because there’s an army of online doomers who enjoy dredging them up. This week, posting on X, Shane Legg, co-founder and chief AGI scientist of Google’s DeepMind, said he sees a “50 percent chance of AGI in the next 3 years.” That wasn’t news, as he’d said roughly as much before, but it did inspire fresh reminders of an interview Legg gave back in 2011. Asked at the site LessWrong about the probability of human extinction ensuing within a year or so of the advent of AGI, he answered, “I don’t know. Maybe 5 percent, maybe 50 percent. I don’t think anybody has a good estimate of this.”

Unless you’ve been doing an extraordinary job of avoiding media and social media during week one of Trump II, you’re aware of an arm gesture made by billionaire Elon Musk during a post-inauguration celebration. Many in Blue America said Musk had trotted out a fascist salute. Many in Red America said he hadn’t. In hopes of resolving this most recent of national rifts, we’ve decided to poll our wise readers. How are we to view the Musk Arm Incident of January 20?

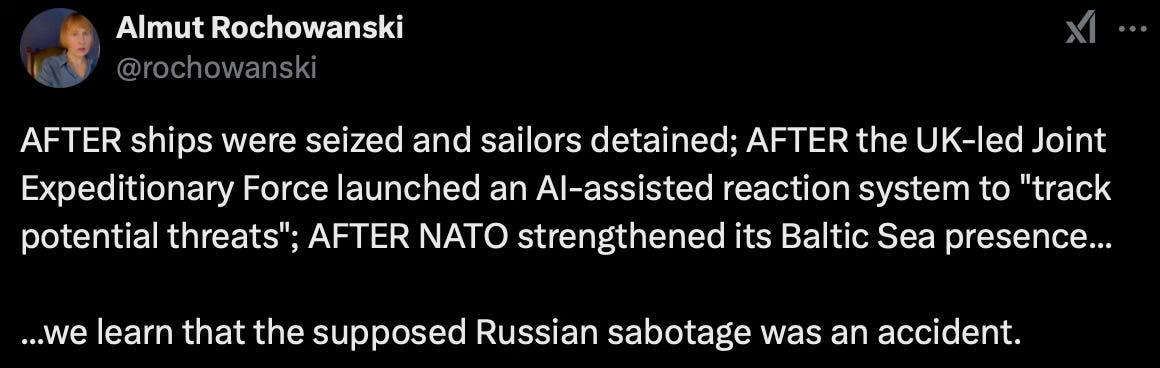

There’s been an unusual amount of undersea cable-slicing in the news lately, often accompanied by allegations of Russian or Chinese sabotage. This week brought two developments on that front, and by some lights (ours, for example) they offer lessons about the geopolitical value of two virtues: epistemic humility and cognitive empathy (translations: not jumping to conclusions; and looking at things from other people’s points of view).

First, the Washington Post reported that Western intelligence officials now believe that maritime accidents, not Russian sabotage, were behind recent instances of damage to energy and internet lines in the Baltic Sea. Before this consensus emerged, Western nations had mobilized to counter the new Russian perfidy, as Almut Rochowanski of the Quincy Institute noted on Twitter:

Then came the second big cable-slicing development: